Get In Touch

WhatsApp: +86 185 0273 2643

Email: [email protected]

Address: No. 6885, Unit 1, Building 1, No. 33 Guangshun North Street, Chaoyang District, Beijing, China

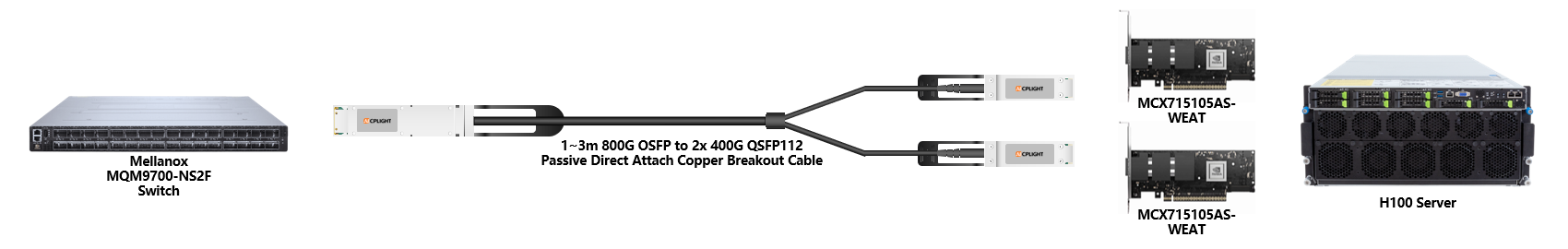

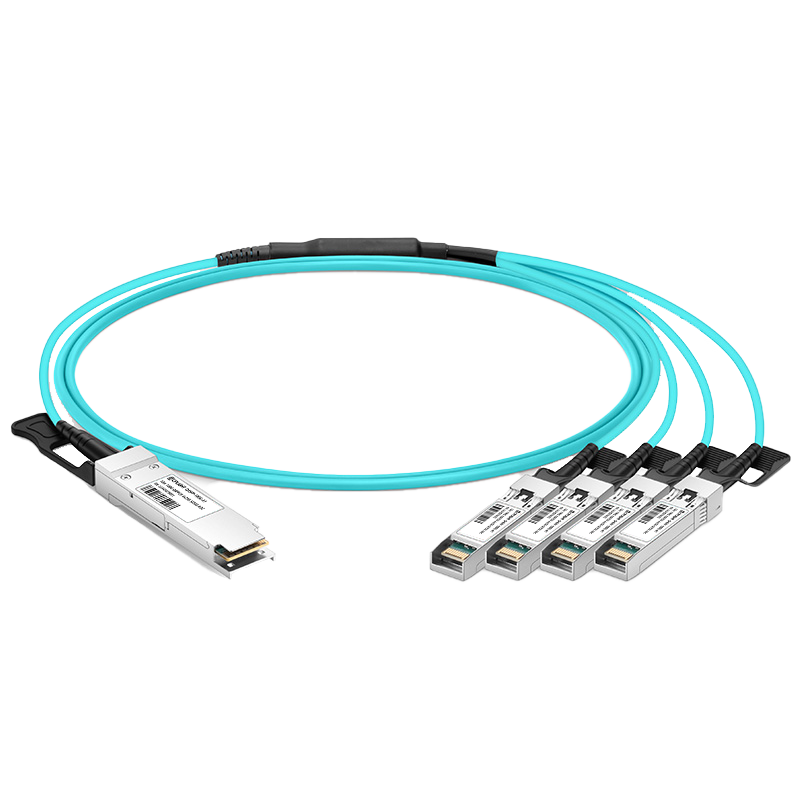

Used in our GPU training pod (8x H100s) to connect each server node. Latency remained sub-microsecond, even with large tensor workloads.