Get In Touch

WhatsApp: +86 185 0273 2643

Email: [email protected]

Address: No. 6885, Unit 1, Building 1, No. 33 Guangshun North Street, Chaoyang District, Beijing, China

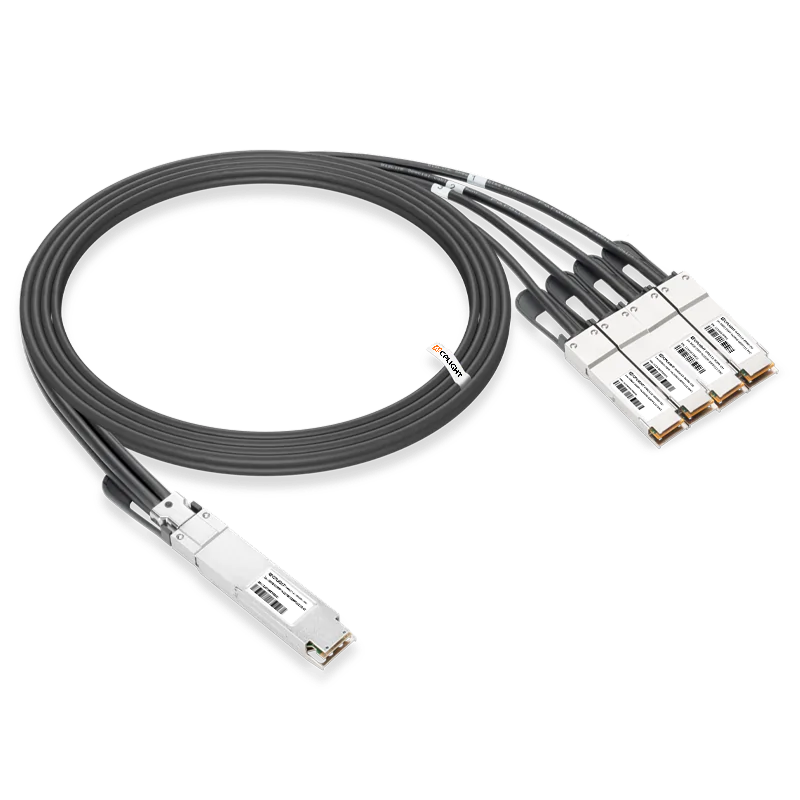

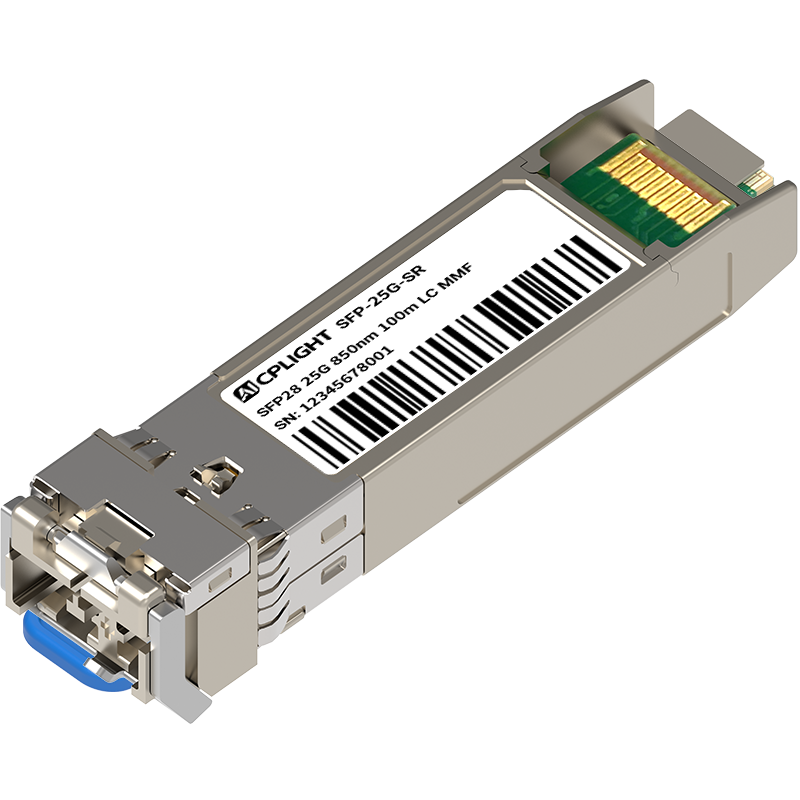

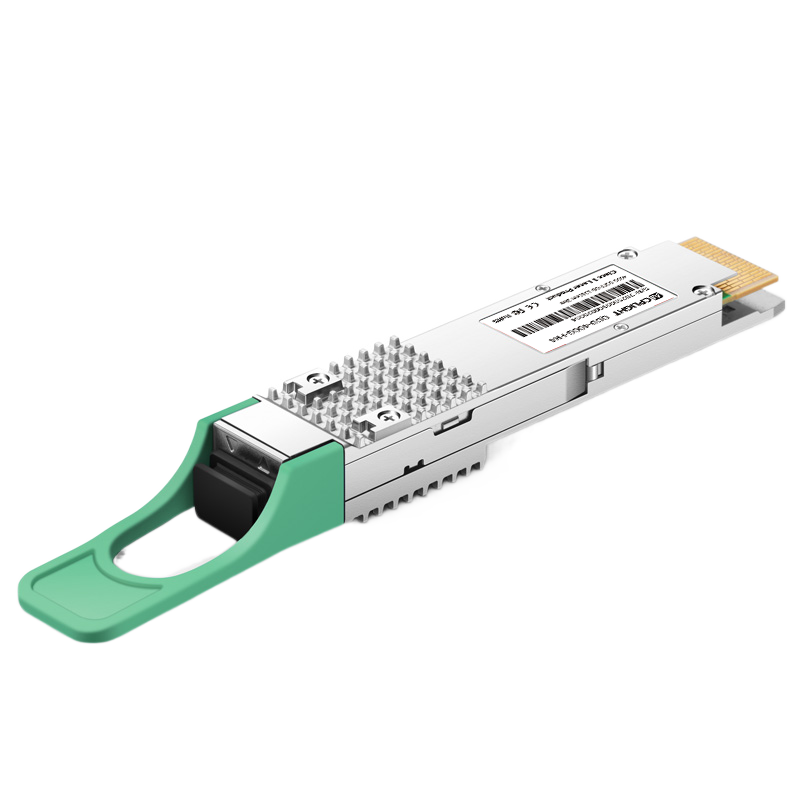

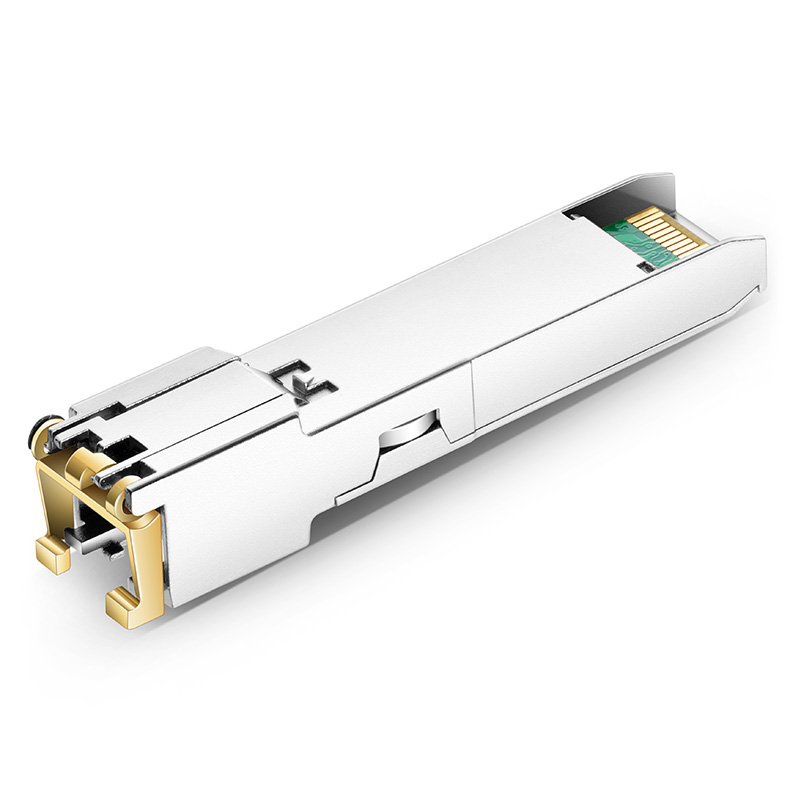

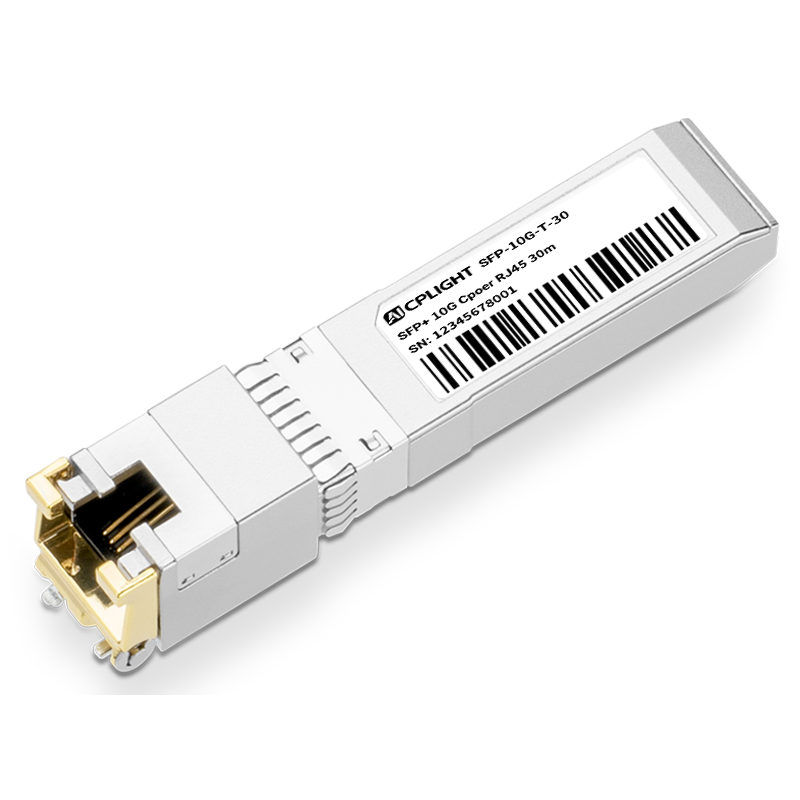

The connectors are solid, and the shielding is robust. No damage or signal degradation even after multiple insertions.