Get In Touch

WhatsApp: +86 185 0273 2643

Email: [email protected]

Address: No. 6885, Unit 1, Building 1, No. 33 Guangshun North Street, Chaoyang District, Beijing, China

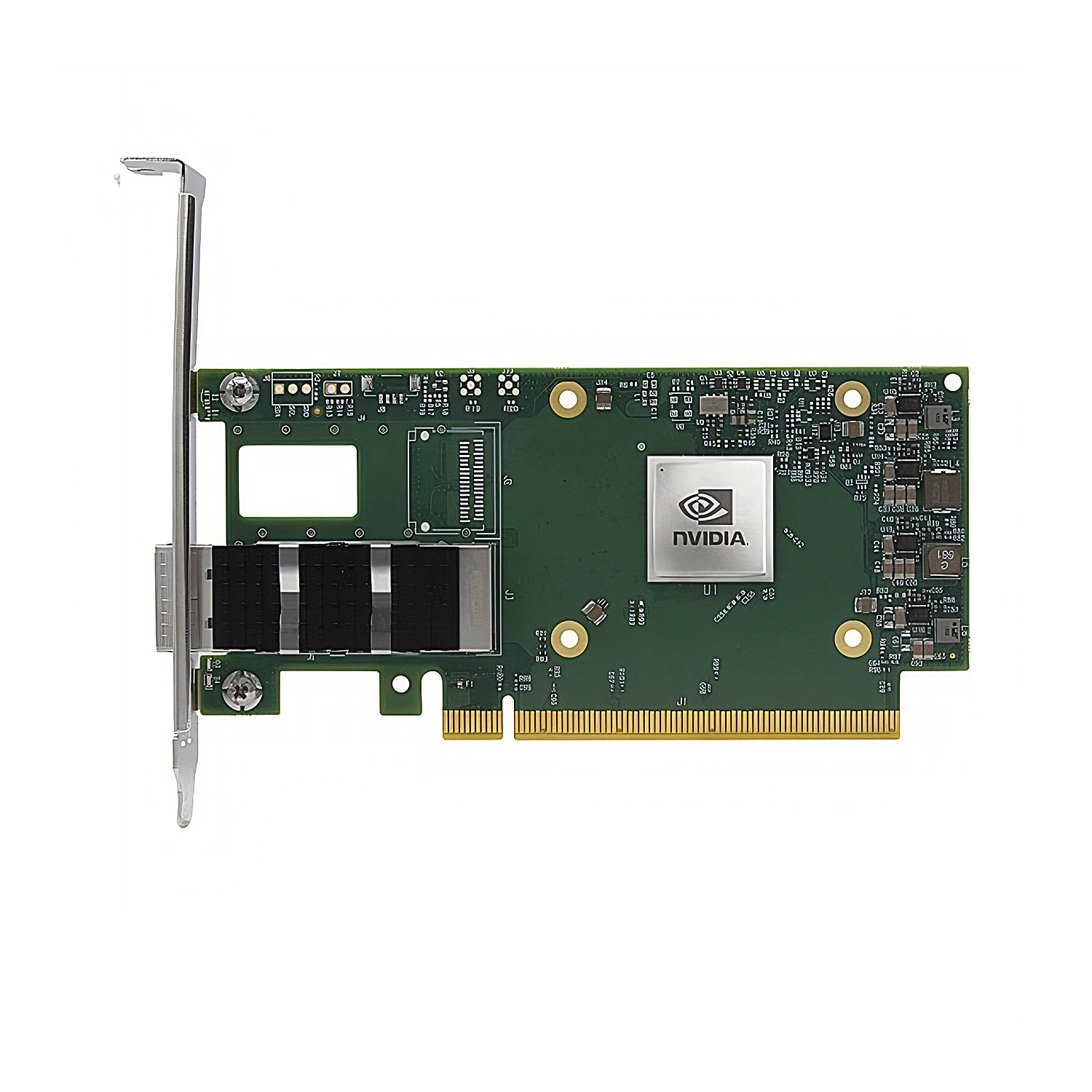

We were able to seamlessly scale our InfiniBand fabric using NVIDIA’s modular switch design. Adding more compute nodes was straightforward and non-disruptive.